Caveats

This information is valid at the time of writing (9.2-6 release).

Currently, installing a cluster requires an Ethernet interface, configured as “internalnet”. This interface is needed to generate a valid license file and the associated certificates.

Selecting Infiniband-only interfaces at the “Head node interfaces” step of the Bright installer will likely result in a failed installation. This behavior may be improved in later releases of the Bright installer.

Installation

The NVIDIA Bright cluster manager installation may be completed as per the installation manual.

It is recommended that the NVIDIA Bright installation ISO, downloaded via the Customer Portal, include the OFED packages. To include them, select the “Include OFED and OPA Packages” checkbox under “Additional Options” on the download ISO page.

Recommended configuration options during installation are listed below.

At the network topology step, select a type 2 network.

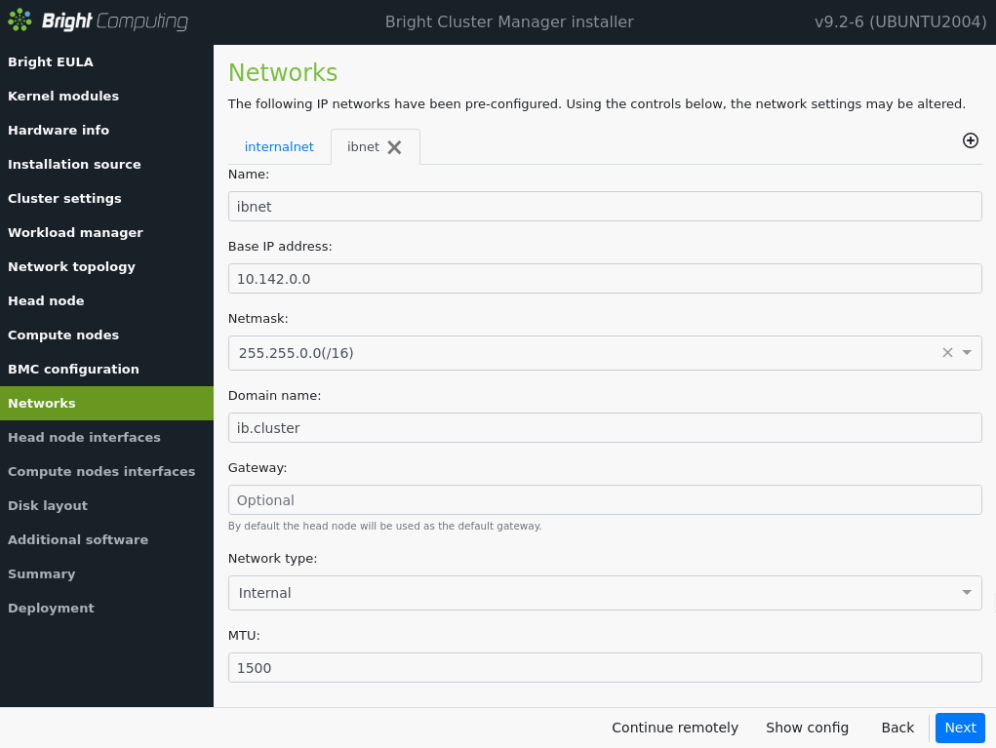

At the “networks” step, we recommend adding an “ibnet” network object.

At the “Head node interfaces” step, configure an ib0 interface as below.

At the “Compute node interfaces” step, configure an ib0 interface as below.

At the “Additional software” step, select the “OFED/OPA stack” checkbox along with the appropriate OFED version.

Post-installation

Once the installation steps have been completed successfully, perform the licensing steps per the installation manual. Licensing (running the request-license command on the primary headnode) must be completed before performing the steps below.

For more information, see section 4.3, “Requesting And Installing A License Using A Product Key”, in the installation manual (NVIDIA Bright Cluster Manager Documentation).

You can now convert the network configuration to Infiniband-only.

Configure ibnet to allow management and node booting.

# cmsh

% network use ibnet

% set nodebooting yes

% set managementallowed yes

% commit

Modify the management network to ibnet in the base partition.

% partition use base

% set managementnetwork ibnet

% commit

Configure the management network for the default category to ibnet.

% category use default

% set managementnetwork ibnet

% commit

Modify the provisioning interface for the headnode to ib0.

% device use master

% set provisioninginterface ib0

% commit

Modify the provisioning interface for the compute node to ib0, then remove the bootif interface from the node.

% device use node001

% set provisioninginterface ib0

% commit

% interfaces

% remove bootif

% commit

% quit
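The interactive session above can also be scripted. The following is a minimal sketch, assuming that cmsh accepts a semicolon-separated command list through its `-c` option, that the compute node is named node001 as in the example, and that the ibnet network object already exists. The `DRY_RUN` variable and the function names are hypothetical helpers introduced for this illustration; they are not part of Bright. By default the script only prints the commands it would run.

```shell
#!/bin/sh
# Sketch only: batches the interactive cmsh steps shown above.
# DRY_RUN, run_cmsh and convert_to_ib are hypothetical names, not Bright tools.

DRY_RUN="${DRY_RUN:-1}"   # 1 = print the commands, 0 = execute them

run_cmsh() {
    # $1: semicolon-separated list of cmsh commands
    if [ "$DRY_RUN" = "1" ]; then
        printf 'cmsh -c "%s"\n' "$1"
    else
        cmsh -c "$1"
    fi
}

convert_to_ib() {
    # Allow management and node booting on ibnet
    run_cmsh "network use ibnet; set nodebooting yes; set managementallowed yes; commit"
    # Point the base partition and the default category at ibnet
    run_cmsh "partition use base; set managementnetwork ibnet; commit"
    run_cmsh "category use default; set managementnetwork ibnet; commit"
    # Provision over ib0 on the headnode and on the compute node
    run_cmsh "device use master; set provisioninginterface ib0; commit"
    run_cmsh "device use node001; set provisioninginterface ib0; commit"
    # Remove the bootif interface from the compute node
    run_cmsh "device use node001; interfaces; remove bootif; commit"
}

convert_to_ib
```

Reviewing the printed commands first and then re-running with `DRY_RUN=0` keeps the scripted path as close as possible to the documented interactive one.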

Reboot the headnode. This is required to update the management interfaces in the various configuration files.

After the headnode reboot, remove the Ethernet interface from the headnode, as it should no longer be required. In the example below, the Ethernet interface is named “eno1”. The device name may differ on your system.

# cmsh

% device use master

% interfaces

% list

Type      Network device name  IP              Network      Start if
physical  eno1                 10.141.255.254  internalnet  always
physical  ib0 [prov]           10.142.255.254  ibnet        always

% remove eno1

% commit
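Because removing the Ethernet interface is hard to undo remotely, it may be worth confirming that ib0 carries the [prov] marker before running the remove step above. The following is a minimal sketch that parses interface listing text in the layout shown in the example. The function name `has_prov_interface` is hypothetical, and the check assumes the device name and the [prov] marker appear as the second and third whitespace-separated fields, as they do in the listing above.

```shell
#!/bin/sh
# Sketch only: has_prov_interface is a hypothetical helper, not a Bright tool.
# It reads an interface listing on stdin and succeeds if the named device
# is tagged as the provisioning interface ([prov]).

has_prov_interface() {
    # $1: device name to look for, for example ib0
    awk -v name="$1" '
        $2 == name && $3 == "[prov]" { found = 1 }
        END { exit !found }
    '
}

# Example use on the headnode (assumes cmsh accepts commands via -c):
#   cmsh -c "device use master; interfaces; list" | has_prov_interface ib0 \
#       && echo "ib0 is the provisioning interface"
```

If the check fails, the provisioning interface change from the earlier step has probably not taken effect, and the Ethernet interface should be left in place until that is resolved.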

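Remove the internalnet network. First set the external network of the base partition to ibnet and disable node booting and management on internalnet, then remove the network object.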

% partition use base

% set externalnetwork ibnet

% commit

% network

% use internalnet

% set nodebooting no

% set managementallowed no

% commit

% ..

% remove internalnet

% commit

Reboot the headnode to complete removal of the Ethernet interface.

The cluster is now Infiniband-only.