1. Prerequisites and Requirements

We will set up the following:

- Kubernetes version: v1.29.6
- Base Command Manager (BCM) version: 10.24.07
- Linux OS: Ubuntu 22.04
- An air-gapped cluster (no internet access at all)
- Head Nodes cannot be selected as Kubernetes nodes at the time of writing.

The first instructions must be executed on a host with internet access. This machine must meet the following requirements:

- The OS must be Ubuntu 22.04 (matching the air-gapped system).
- The BCM package repositories are in place.
- The airgap-scripts directory, taken from BCM version >= 10.24.07, is available.
- Internet access.

For this reason, it can be convenient to use a BCM 10.24.07 virtual machine as this host.
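Before downloading anything, it can help to confirm that the internet-connected host really matches the air-gapped system. The sketch below reads the standard /etc/os-release file (its ID and VERSION_ID fields). The function name check_os_release is hypothetical, and it only checks the OS, not the BCM repositories.

```shell
#!/usr/bin/env bash
# Hypothetical helper: succeed only if the os-release file describes Ubuntu 22.04.
# The file path is a parameter so the check can also be run against a copy.
check_os_release() {
  local file="${1:-/etc/os-release}"
  local id version
  [ -r "$file" ] || { echo "cannot read $file" >&2; return 1; }
  # os-release consists of KEY=value lines; values may be double-quoted.
  id=$(sed -n 's/^ID=//p' "$file" | tr -d '"')
  version=$(sed -n 's/^VERSION_ID=//p' "$file" | tr -d '"')
  if [ "$id" = "ubuntu" ] && [ "$version" = "22.04" ]; then
    return 0
  fi
  echo "expected Ubuntu 22.04, found ${id:-unknown} ${version:-unknown}" >&2
  return 1
}

# Usage: check_os_release && echo "OS matches the air-gapped system"
```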

2. Prepare air-gapped requirements

If the cm-setup package is installed on the internet-connected machine, the scripts can be found here:

/cm/local/apps/cm-setup/lib/python3.9/site-packages/cmsetup/plugins/kubernetes/airgap-scripts/

Otherwise, the scripts need to be copied to the machine beforehand. In the following steps, we assume the scripts are in this cm-setup location.
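If you prefer to script the location choice together with the PATH change from the next step, a minimal sketch follows. It uses the cm-setup location when it exists and otherwise falls back to a directory you pass in (the fallback path in the usage line is hypothetical). The helper names are also hypothetical; add_to_path_once appends the directory only if it is not already on PATH, so running it twice does not grow PATH.

```shell
#!/usr/bin/env bash
# Default location of the air-gap scripts when cm-setup is installed.
CMSETUP_AIRGAP_DIR=/cm/local/apps/cm-setup/lib/python3.9/site-packages/cmsetup/plugins/kubernetes/airgap-scripts

# Hypothetical helper: append a directory to PATH unless it is already present.
add_to_path_once() {
  local dir="${1%/}"              # normalize away a trailing slash
  case ":$PATH:" in
    *":$dir:"*|*":$dir/:"*) ;;    # already on PATH, nothing to do
    *) PATH="$PATH:$dir" ;;
  esac
  export PATH
}

# Hypothetical helper: prefer the cm-setup location, else use the given fallback.
use_airgap_scripts() {
  local fallback="$1"
  if [ -d "$CMSETUP_AIRGAP_DIR" ]; then
    add_to_path_once "$CMSETUP_AIRGAP_DIR"
  elif [ -n "$fallback" ] && [ -d "$fallback" ]; then
    add_to_path_once "$fallback"
  else
    echo "airgap-scripts not found; copy them to this machine first" >&2
    return 1
  fi
}

# Usage (fallback path is hypothetical):
#   use_airgap_scripts /root/airgap-scripts && command -v download_helm_binary.sh
```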

- Add the airgap-scripts directory to the PATH environment variable:

export PATH=$PATH:/cm/local/apps/cm-setup/lib/python3.9/site-packages/cmsetup/plugins/kubernetes/airgap-scripts/

- Install helm to /usr/local/bin/helm by following the instructions at https://helm.sh/docs/intro/install/, or with the helper script that ships with cm-setup:

download_helm_binary.sh

Optionally, verify that helm works:

root@internet-host:~# helm version

version.BuildInfo{Version:"v3.15.2", GitCommit:"1a500d5625419a524fdae4b33de351cc4f58ec35", GitTreeState:"clean", GoVersion:"go1.22.4"}

- Install docker:

If this host is a BCM Head Node, you can run cm-docker-setup to set up Docker on it, followed by module load docker after the setup completes.

Otherwise, follow the instructions at https://docs.docker.com/engine/install/.

Test whether the deployment was successful with docker ps. If the output looks as follows, proceed to the next step:

root@internet-host:~# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

Note that the Docker service might take a few seconds to initialize and start.

- Create a working directory, for example /root/airgapped, and run the following scripts within it:

mkdir -p airgapped

cd airgapped

download_ubuntu_packages.sh

download_container_images.sh

download_helm_charts.sh

cd ..

tar -czf airgapped.tar airgapped

It is best to run them one by one to ensure that each of them succeeds. Note that the download scripts all write their output to the current working directory.

- Save airgapped.tar to a USB stick, for example, and make it available on the active Head Node of the air-gapped cluster.
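Because the archive travels on removable media, it can be worth recording a checksum on the internet-connected host and verifying it on the Head Node before extracting. The sketch below uses sha256sum from GNU coreutils; the helper names and the .sha256 file name are our own conventions, not something the air-gap scripts produce. Note also that tar -czf creates a gzip-compressed archive despite the .tar name; GNU tar detects the compression automatically when extracting with tar -xvf.

```shell
#!/usr/bin/env bash
# On the internet-connected host, after "tar -czf airgapped.tar airgapped":
write_checksum() {
  local archive="$1"
  # Run from the archive's directory so the .sha256 file holds a relative name.
  (cd "$(dirname "$archive")" &&
    sha256sum "$(basename "$archive")" > "$(basename "$archive").sha256")
}

# On the air-gapped Head Node, with both files copied into the same directory:
verify_checksum() {
  local archive="$1"
  (cd "$(dirname "$archive")" && sha256sum -c "$(basename "$archive").sha256")
}

# Usage:
#   write_checksum airgapped.tar    # then copy airgapped.tar and airgapped.tar.sha256
#   verify_checksum airgapped.tar   # prints "airgapped.tar: OK" on success
```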

3. Install air-gapped package requirements

Once the airgapped.tar file has been copied onto the active Head Node of the air-gapped cluster, continue with the following steps:

- Extract the file:

tar -xvf airgapped.tar

This should result in an airgapped directory. Change into it and stay there for the rest of this document; several scripts are run from this directory.

- Modify the PATH environment variable here as well, to make the air-gap helper scripts available:

export PATH=$PATH:/cm/local/apps/cm-setup/lib/python3.9/site-packages/cmsetup/plugins/kubernetes/airgap-scripts/

- First, install the packages and other binaries on the Head Node(s).

Install helm

Helm is not installed via a package, but is available in the airgapped folder, so a simple copy suffices:

cp -prv ./packages/helm /usr/local/bin/helm

Install packages

If needed, first move any packages you do not want to install on the Head Node out of the packages directory.

pushd packages && apt install ./*.deb && popd

Repeat both steps on the secondary Head Node if this is an HA BCM cluster. One way to do this is to copy the files to the secondary Head Node and run the same steps there.

- Then install the packages and other binaries in the relevant software images.

For example, to use the software image /cm/images/default-image, one option is:

cp -prv packages /cm/images/default-image/tmp/

cm-chroot-sw-img /cm/images/default-image "pushd /tmp/packages && apt install ./*.deb"

rm -rfv /cm/images/default-image/tmp/packages

Before the apt install step, you can curate /tmp/packages per software image. If some software images already have their GPU drivers, the cuda-driver package (and related packages) is best skipped, so that it does not replace the existing drivers.
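Curating a packages directory can be done by moving unwanted .deb files aside rather than deleting them, so that apt install ./*.deb no longer sees them. The sketch below is hypothetical (skip_debs is not part of the air-gap scripts) and relies on the usual Debian file naming name_version_arch.deb, so a pattern such as 'cuda-driver*' matches by file name. Which related packages to skip depends on the drivers already present in each image.

```shell
#!/usr/bin/env bash
# Hypothetical helper: move .deb files whose names match a glob into a "skipped"
# subdirectory; "apt install ./*.deb" does not recurse, so they are ignored.
skip_debs() {
  local dir="$1" pattern="$2" f moved=0
  mkdir -p "$dir/skipped"
  # $pattern is intentionally unquoted so that it expands as a glob.
  for f in "$dir"/$pattern.deb; do
    [ -e "$f" ] || continue        # the glob matched nothing
    mv -- "$f" "$dir/skipped/"
    moved=$((moved + 1))
  done
  echo "moved $moved package(s) matching '$pattern' to $dir/skipped"
}

# Usage, after copying the packages into the image and before apt install:
#   skip_debs /cm/images/default-image/tmp/packages 'cuda-driver*'
```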

4. Install docker and docker registry

The following two wizards can be run with --skip-packages, because the required packages were already pre-installed in the previous section.

cm-container-registry-setup --skip-packages

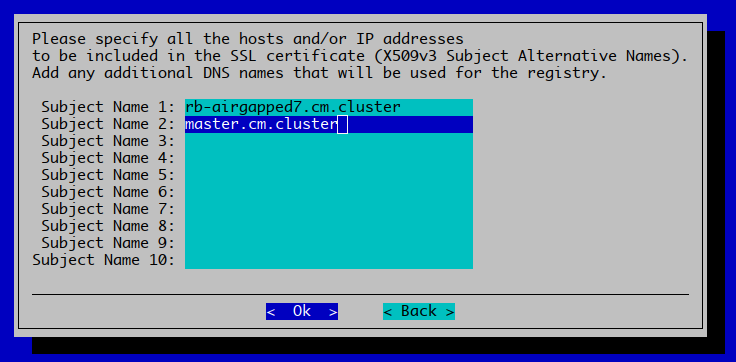

We chose the active Head Node and set a custom domain name (an additional Subject Name): master.cm.cluster. Then choose Save & Deploy. We use this DNS name throughout the rest of the document.

cm-docker-setup --skip-packages

In our case, we chose only the active Head Node. Choose Save & Deploy.

Test whether the deployment was successful with docker ps. If the output looks as follows, proceed to the next step:

# docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

Please note that the docker service might take a few seconds to initialize and start properly.
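Since the Docker service may need a few seconds to start, a short retry loop avoids a false failure. A minimal sketch; retry_until is a hypothetical helper that reruns a command once per second, for at most the given number of attempts.

```shell
#!/usr/bin/env bash
# Hypothetical helper: retry a command once per second until it succeeds,
# giving up after the given number of attempts.
retry_until() {
  local attempts="$1" i=0
  shift
  while [ "$i" -lt "$attempts" ]; do
    if "$@" >/dev/null 2>&1; then
      return 0
    fi
    i=$((i + 1))
    sleep 1
  done
  echo "command did not succeed after $attempts attempts: $*" >&2
  return 1
}

# Usage on the Head Node after cm-docker-setup:
#   retry_until 30 docker ps && docker ps
```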

5. Push all the container images and Helm charts

From the airgapped directory, first push all container images to the Docker registry:

push_container_images.sh

Then push the Helm charts:

push_helm_charts.sh

Note that push_helm_charts.sh accepts additional flags (see push_helm_charts.sh --help).
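To confirm that the pushes landed in the registry, the Docker Registry HTTP API v2 provides a /v2/_catalog endpoint that lists repositories as JSON. The sketch assumes the registry name chosen in section 4 (master.cm.cluster:5000), that the registry allows unauthenticated reads, and that its CA certificate is at the containerd path used in section 7; adjust these if your setup differs. The function names are hypothetical.

```shell
#!/usr/bin/env bash
# Assumptions: registry name from section 4, CA certificate path as used in section 7.
REGISTRY="${REGISTRY:-master.cm.cluster:5000}"
CA_CERT="${CA_CERT:-/cm/local/apps/containerd/var/etc/certs.d/$REGISTRY/ca.crt}"

# Build the Registry v2 catalog URL; "n" caps the number of entries returned.
catalog_url() {
  printf 'https://%s/v2/_catalog?n=%s\n' "$1" "${2:-1000}"
}

# Print the JSON listing, of the form {"repositories":[...]}.
list_registry_repos() {
  curl -fsS --cacert "$CA_CERT" "$(catalog_url "$REGISTRY")"
}

# Usage: list_registry_repos
```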

6. Generate cm-kubernetes-setup.conf

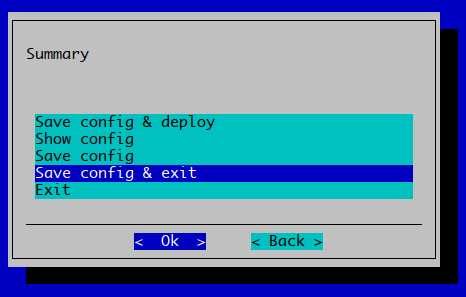

We walk through the entire setup wizard (cm-kubernetes-setup) and, once it is completed, choose Save config & Exit, which saves the configuration file to /root/cm-kubernetes-setup.conf.

All screens of the wizard are shown next. The most important points to note are:

- Head Nodes cannot be selected as Kubernetes control-plane or worker nodes.

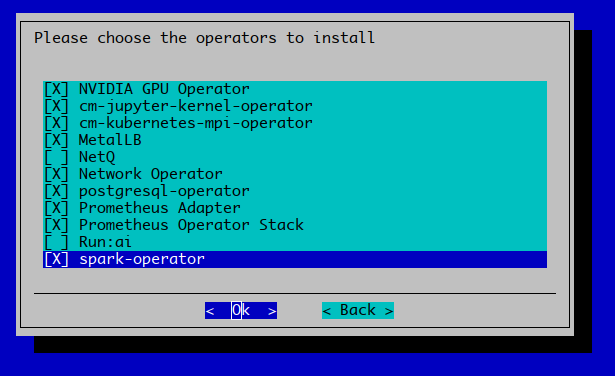

- In the Operators selection screen, NetQ and Run:AI (*) are currently not supported.

- NVAIE operators are not supported in air-gapped setups either.

(*) Note that this Run:AI operator is for the non-self-hosted (SaaS) version of Run:AI, which is not designed for air-gapped environments.

If these points are clear, you can skip ahead to the next section.

The complete Kubernetes setup Wizard

Log in to the Head Node and run cm-kubernetes-setup.

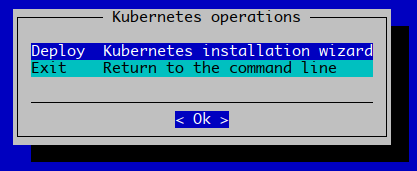

We choose Deploy.

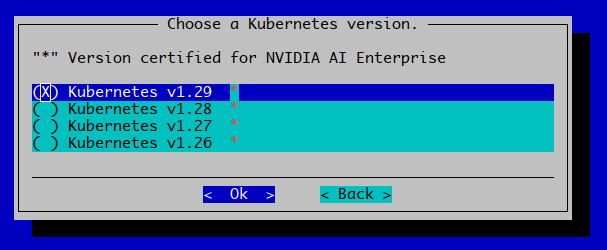

We choose version 1.29 here. In the future we’ll add support for other Kubernetes versions.

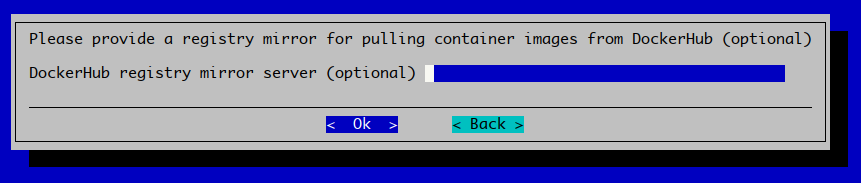

If your organization has a registry mirror, it can be configured here. We choose Ok.

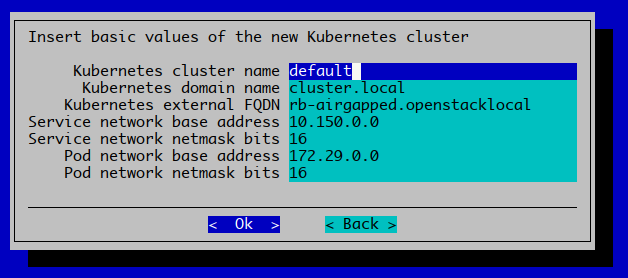

The label of the Kubernetes setup can be changed here; we leave it at the default. The FQDN can typically be set to something more appropriate. Nothing on this screen is specific to air-gapped setups.

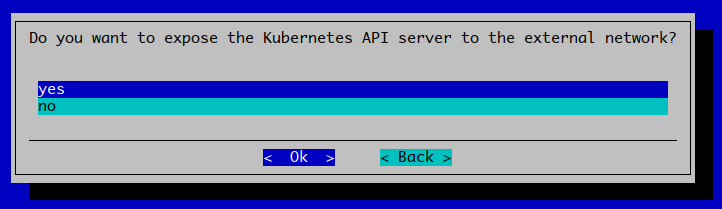

We choose

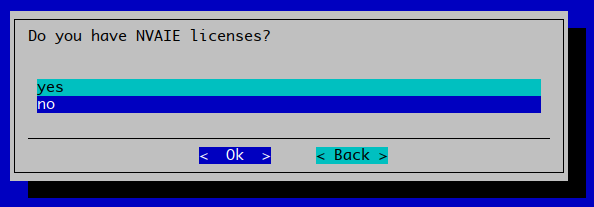

We choose yes here. The value doesn’t matter for airgapped.

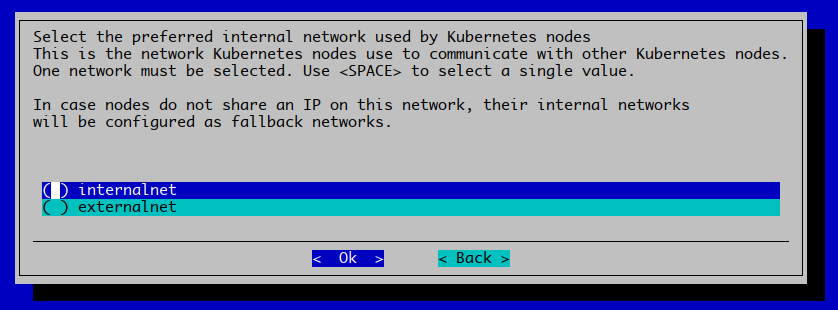

The correct network has to be chosen here. In our example setup, this is the internalnet.

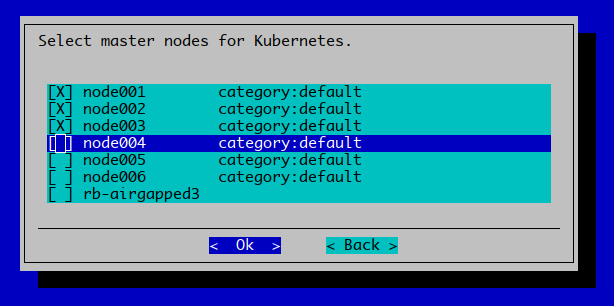

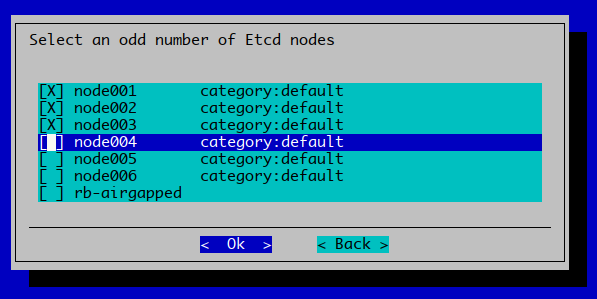

In our example setup we’re choosing node00[1-3] for our Kubernetes control-plane. Please note that choosing Head Nodes is not supported at the time of writing.

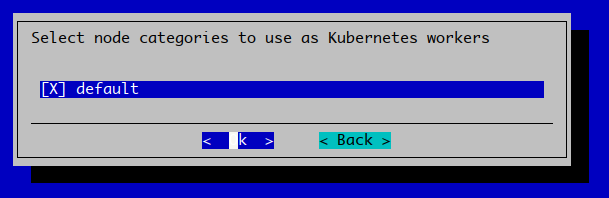

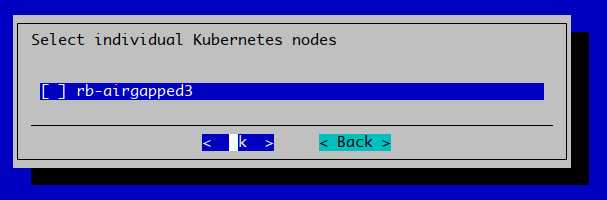

We also choose the default category for the worker nodes. Since three nodes from this category were chosen as control-plane nodes, those nodes get both the control-plane and worker roles, but they effectively act only as control-plane nodes. Nodes node00[4-6] are the workers in our setup.

Note again that Head Nodes are not supported as Kubernetes control-plane or worker nodes.

We set up our three-node etcd cluster on the same nodes that we designated for the Kubernetes control plane.

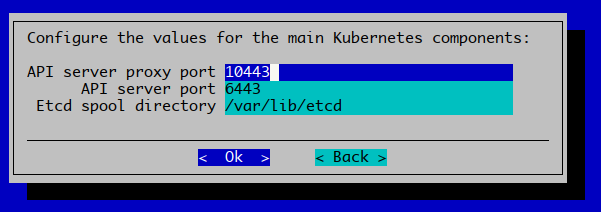

We do not customize the ports for the Kubernetes API server and proxy (load balancer).

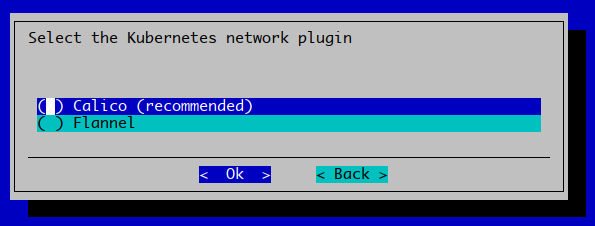

We go ahead with Calico.

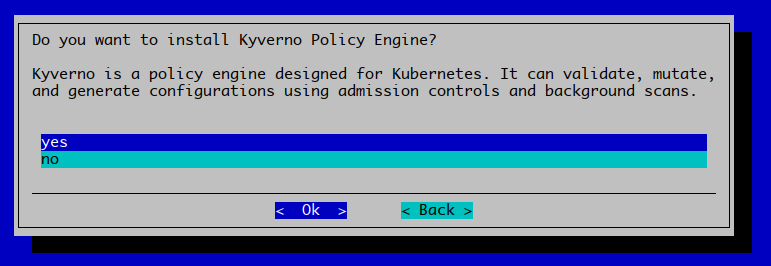

Kyverno is supported with airgapped.

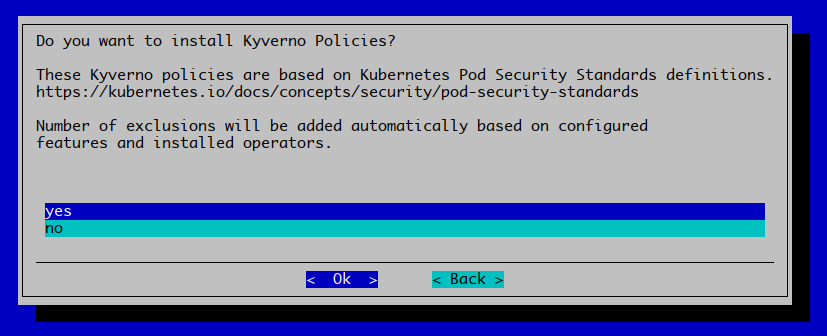

Kyverno policies are as well.

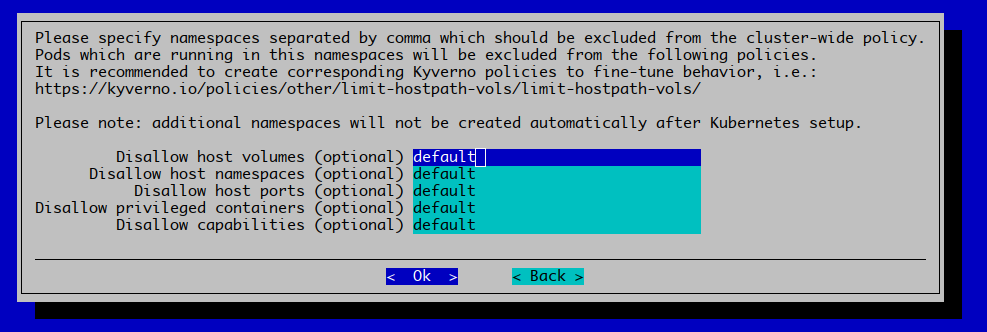

Since we chose to set up Kyverno, we can already add namespaces to be excluded from certain policies.

NVAIE is not supported at the time of writing.

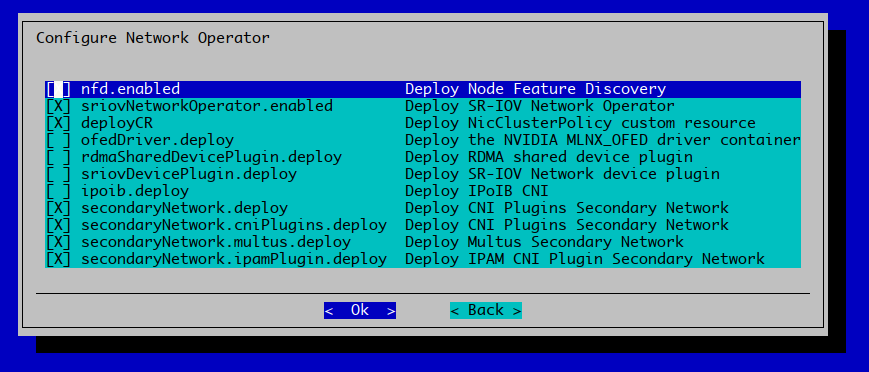

The operators checked in the list above are supported in air-gapped setups. As mentioned at the beginning of this section, NetQ and Run:AI are not.

These operators are optional, but since we selected them for our setup, the wizard shows additional follow-up screens with further customization options.

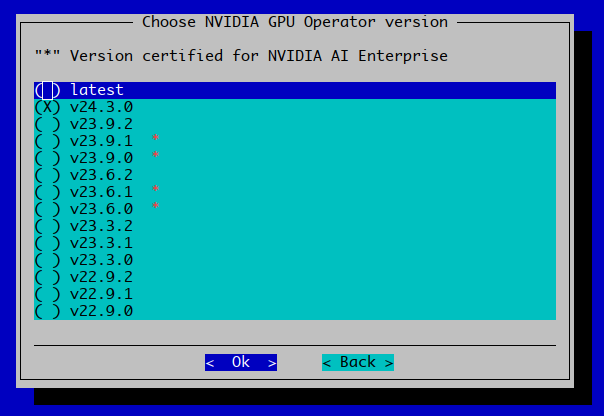

Note that v24.3.0 is the only version supported out of the box in air-gapped setups. With manual effort, a different version can be installed if necessary.

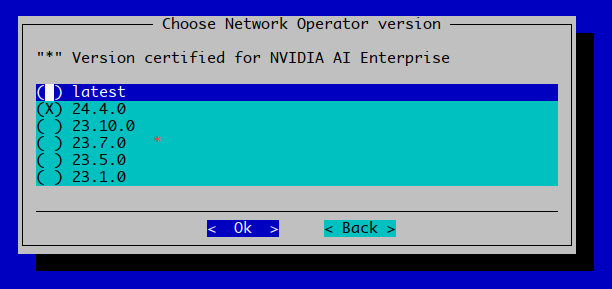

Note that v24.4.0 is the only version supported out of the box in air-gapped setups. With manual effort, a different version can be installed if necessary.

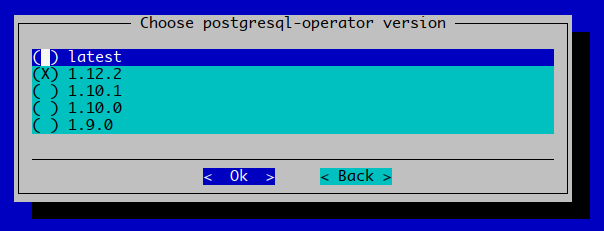

Note that 1.12.2 is the only version supported out of the box in air-gapped setups. With manual effort, a different version can be installed if necessary.

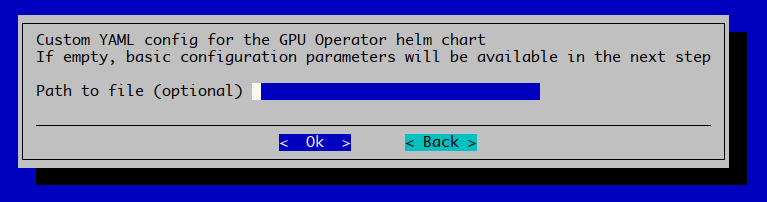

We chose Ok here.

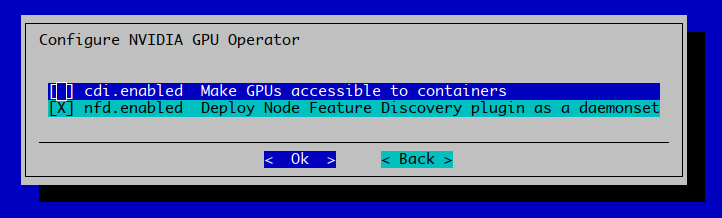

We did not make any changes and chose Ok here.

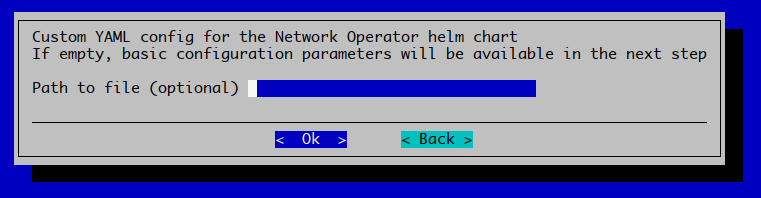

We chose Ok here.

We changed nothing and chose Ok here.

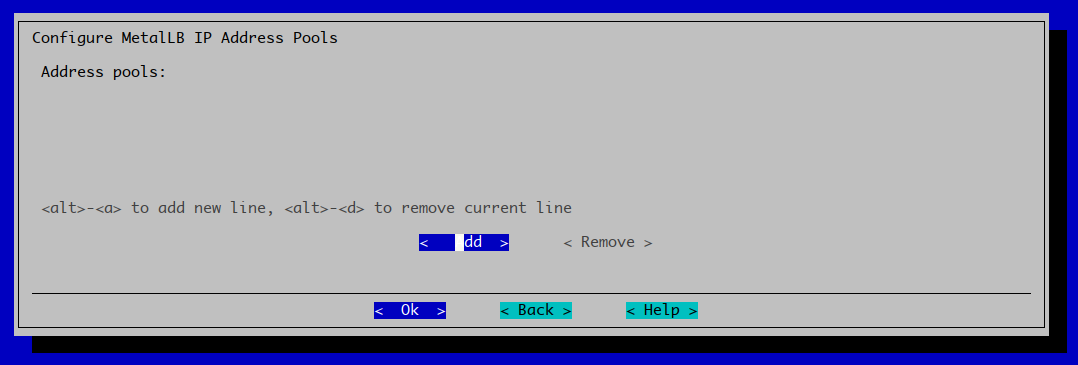

We did not add any address pools for MetalLB at this point, and chose Ok.

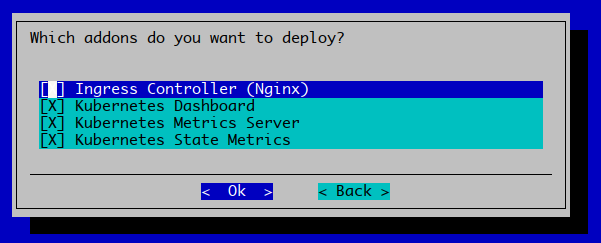

We selected all add-ons and chose Ok. They are optional, but all are recommended.

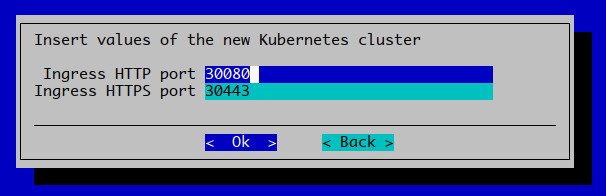

We kept the defaults for the Ingress ports and chose Ok.

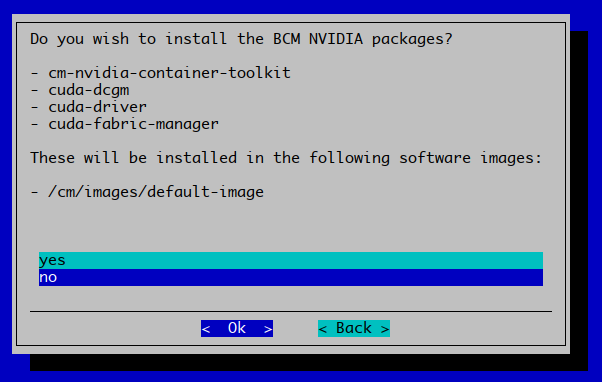

We chose no here, but in principle it does not matter: in air-gapped installations, package installation is handled separately. We already pre-installed the packages, so whether these were included was decided at that point.

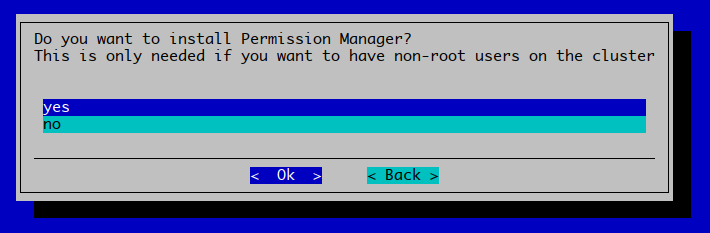

We choose yes here.

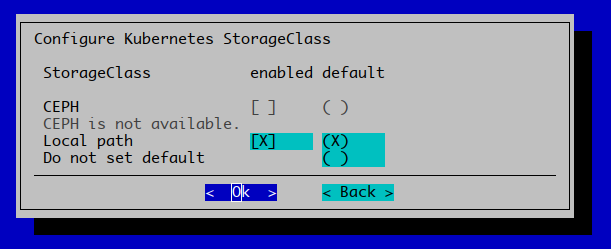

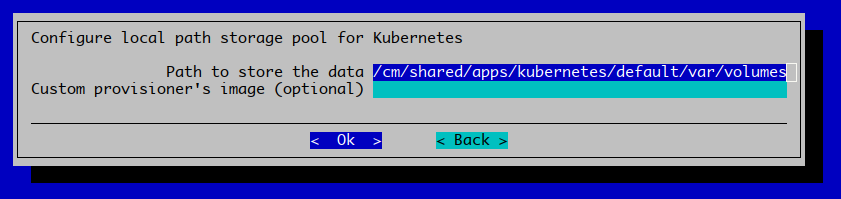

Local path storage is supported; we kept all defaults and chose Ok.

We kept all the defaults and chose Ok.

Finally, and this is important, choose Save config & exit, because some air-gapped-specific settings must be inserted into the config before the setup is executed with it.

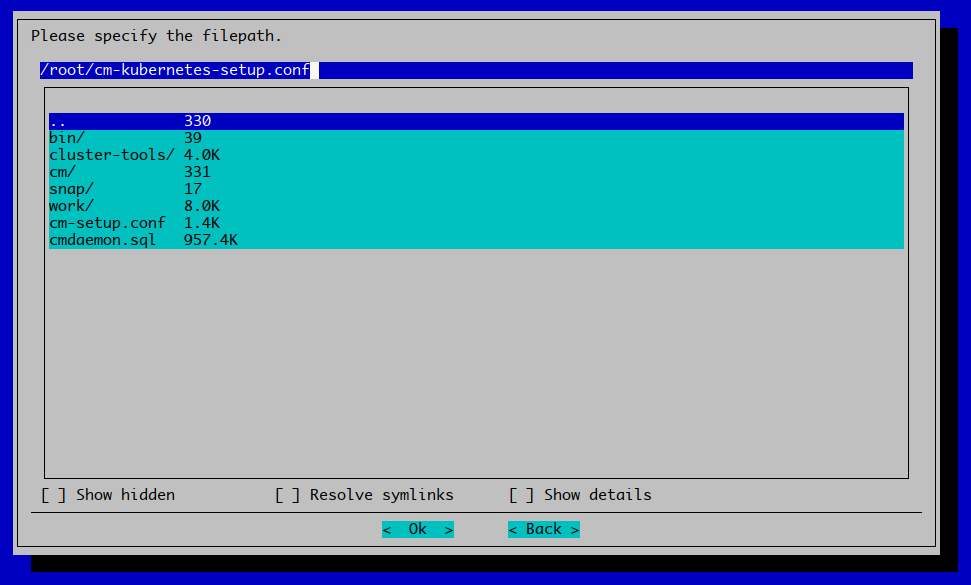

This should be the final screen where the config file is saved. We keep this as /root/cm-kubernetes-setup.conf

Note that the setup is not executed at this point; instead, the wizard shows how it can be executed. Before doing that, complete the next section first!

7. Prepare config for air gapped

Pick your favorite editor and open /root/cm-kubernetes-setup.conf (the file we just generated). Then find this section:

    modules:
      kubernetes:
        airgap:
          helm:
            ca: ''
            repo: ''
          registry: ''

And change it to:

    modules:
      kubernetes:
        airgap:
          helm:
            ca: '/cm/local/apps/containerd/var/etc/certs.d/master.cm.cluster:5000/ca.crt'
            repo: 'oci://master.cm.cluster:5000/helm-charts'
          registry: 'master.cm.cluster:5000'
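Before deploying, a quick grep can confirm that the three air-gap values made it into the saved file. A minimal sketch, assuming the single-quoted values shown above; check_airgap_conf is a hypothetical helper that only checks that the strings are present, not that they sit under the right YAML keys.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report any expected air-gap value missing from the config.
check_airgap_conf() {
  local conf="${1:-/root/cm-kubernetes-setup.conf}" missing=0 value
  for value in \
    "ca: '/cm/local/apps/containerd/var/etc/certs.d/master.cm.cluster:5000/ca.crt'" \
    "repo: 'oci://master.cm.cluster:5000/helm-charts'" \
    "registry: 'master.cm.cluster:5000'"; do
    # -F matches the text literally, so the dots are not regex wildcards.
    if ! grep -qF -- "$value" "$conf"; then
      echo "missing in $conf: $value" >&2
      missing=1
    fi
  done
  return "$missing"
}

# Usage: check_airgap_conf && echo "air-gap settings present"
```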

8. Deploy Kubernetes

Finally, run the setup with the edited config:

cm-kubernetes-setup -v -c /root/cm-kubernetes-setup.conf

This should result in a working Kubernetes cluster.
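One way to check the result is kubectl get nodes, which should list the control-plane and worker nodes with status Ready. A sketch, assuming kubectl is on PATH with a working kubeconfig on the Head Node (on BCM this may require loading the Kubernetes environment module first); count_not_ready is a hypothetical helper that reads the --no-headers output, where STATUS is the second column.

```shell
#!/usr/bin/env bash
# Hypothetical helper: read "kubectl get nodes --no-headers" output on stdin
# and print how many nodes have a STATUS (column 2) other than Ready.
# A status such as "Ready,SchedulingDisabled" still counts as Ready.
count_not_ready() {
  awk '$2 != "Ready" && $2 !~ /^Ready,/ { n++ } END { print n + 0 }'
}

# Usage:
#   kubectl get nodes --no-headers | count_not_ready   # expect 0
```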